# Int-HRL

This is the official repository for [Int-HRL: Towards Intention-based Hierarchical Reinforcement Learning](https://collaborative-ai.org/publications/penzkofer24_ncaa/).

Int-HRL uses eye gaze from human demonstration data on the Atari game Montezuma's Revenge to extract human players' intentions and converts them into sub-goals for Hierarchical Reinforcement Learning (HRL). For further details, see the corresponding paper.

## Dataset

The Atari-HEAD (Atari Human Eye-Tracking and Demonstration) dataset is available at [https://zenodo.org/record/3451402#.Y5chr-zMK3J](https://zenodo.org/record/3451402#.Y5chr-zMK3J).

To pre-process the Atari-HEAD data, run [Preprocess_AtariHEAD.ipynb](Preprocess_AtariHEAD.ipynb). This produces the `all_trials.pkl` file required by the following steps.
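If you want to inspect the pre-processed data outside the notebooks, the snippet below is a minimal sketch for loading it. It assumes `all_trials.pkl` is a standard Python pickle; its internal structure is not documented here, and the helper name `load_trials` is hypothetical. Only unpickle files you generated yourself, since `pickle.load` can execute arbitrary code. If the notebook stored pandas objects, pandas must be installed to load them.

```python
import pickle
from pathlib import Path
from typing import Any, Union


def load_trials(path: Union[str, Path] = "all_trials.pkl") -> Any:
    """Load the pre-processed Atari-HEAD trials (hypothetical helper).

    Assumption: the file is a plain pickle written by Preprocess_AtariHEAD.ipynb.
    """
    path = Path(path)
    if not path.exists():
        # Fail early with a hint instead of an opaque pickle error.
        raise FileNotFoundError(f"{path} not found; run Preprocess_AtariHEAD.ipynb first")
    with path.open("rb") as f:
        return pickle.load(f)
```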

## Sub-goal Extraction Pipeline

1. [RAM State Labeling](RAMStateLabeling.ipynb): annotate the Atari-HEAD data with room ID and level information, as well as agent and skull locations.

2. [Subgoals From Gaze](SubgoalsFromGaze.ipynb): extract sub-goal proposals by generating saliency maps from gaze data.

3. [Alignment with Trajectory](TrajectoryMatching.ipynb): run an expert trajectory to determine the order of the sub-goals.
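The notebooks contain the actual pipeline. As a rough, self-contained illustration of the idea behind steps 2 and 3, the sketch below builds a saliency map by accumulating gaze points into a histogram and blurring it with a Gaussian, greedily picks peaks as sub-goal proposals, and orders them by the first time step at which an expert trajectory comes close to each one. This is a generic stand-in technique, not necessarily the notebooks' exact method: the function names, the parameters (`sigma`, `suppress_radius`, `reach_radius`, `min_value`) and the assumption that gaze and agent positions are given in 160x210 frame pixel coordinates are all hypothetical.

```python
import numpy as np


def gaze_saliency_map(gaze_xy, height=210, width=160, sigma=5.0):
    """Gaussian-blurred histogram of gaze points, normalised to a peak of 1.

    gaze_xy: array-like of shape (N, 2) holding (x, y) pixel coordinates.
    Assumption: coordinates live in the native 160x210 Atari frame.
    """
    gaze_xy = np.asarray(gaze_xy, dtype=float)
    sal = np.zeros((height, width))
    xs = np.clip(gaze_xy[:, 0].astype(int), 0, width - 1)
    ys = np.clip(gaze_xy[:, 1].astype(int), 0, height - 1)
    np.add.at(sal, (ys, xs), 1.0)  # count repeated points correctly
    # Separable Gaussian blur: convolve every row, then every column.
    radius = int(3 * sigma)
    t = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (t / sigma) ** 2)
    kernel /= kernel.sum()
    sal = np.apply_along_axis(np.convolve, 1, sal, kernel, mode="same")
    sal = np.apply_along_axis(np.convolve, 0, sal, kernel, mode="same")
    peak = sal.max()
    return sal / peak if peak > 0 else sal


def propose_subgoals(sal, k=3, suppress_radius=10, min_value=0.1):
    """Greedy peak picking with non-maximum suppression; returns (x, y) tuples."""
    sal = sal.copy()
    peaks = []
    for _ in range(k):
        y, x = np.unravel_index(np.argmax(sal), sal.shape)
        if sal[y, x] < min_value:
            break  # remaining regions are not salient enough
        peaks.append((int(x), int(y)))
        # Zero out a square around the peak so the next pick is elsewhere.
        y0, y1 = max(0, y - suppress_radius), y + suppress_radius + 1
        x0, x1 = max(0, x - suppress_radius), x + suppress_radius + 1
        sal[y0:y1, x0:x1] = 0.0
    return peaks


def order_by_trajectory(subgoals, agent_xy, reach_radius=8.0):
    """Sort sub-goals by the first step the expert agent is within reach_radius.

    Sub-goals the trajectory never reaches are dropped.
    """
    agent_xy = np.asarray(agent_xy, dtype=float)
    first_hit = []
    for sg in subgoals:
        dist = np.linalg.norm(agent_xy - np.asarray(sg, dtype=float), axis=1)
        hits = np.flatnonzero(dist <= reach_radius)
        if hits.size:
            first_hit.append((int(hits[0]), sg))
    return [sg for _, sg in sorted(first_hit)]
```

For example, gaze clustered on two screen locations yields two proposals, which `order_by_trajectory` then sorts into the order in which the expert actually visited them. Ordering by first visit keeps the sub-goal sequence consistent with a single successful playthrough.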

## Intention-based Hierarchical RL Agent

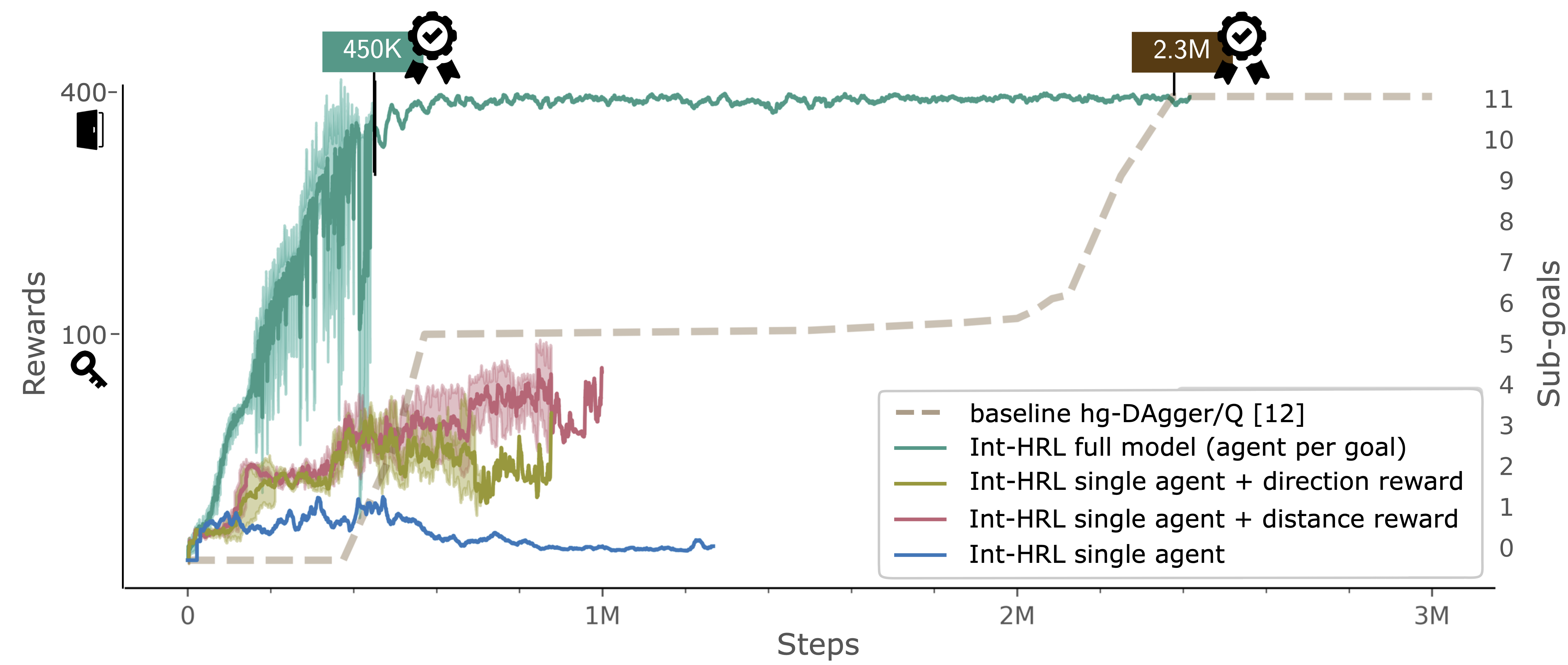

The Int-HRL agent is based on the hierarchically guided Imitation Learning method hg-DAgger/Q, and we adapted code from [https://github.com/hoangminhle/hierarchical_IL_RL](https://github.com/hoangminhle/hierarchical_IL_RL).

Thanks to the novel sub-goal extraction pipeline, our agent does not require an expert during training and is more than three times as sample efficient as hg-DAgger/Q.

To run the full agent with 12 separate low-level agents for sub-goal execution, run [agent/run_experiment.py](agent/run_experiment.py). For the single-agent variant (one low-level agent shared across all sub-goals), run [agent/single_agent_experiment.py](agent/single_agent_experiment.py).
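The structural difference between the two variants can be sketched as follows. This is a simplified illustration, not the code in `agent/`: the class names `LowLevelAgent` and `HierarchicalController` are hypothetical, the low-level agent is a random placeholder (in hg-DAgger/Q the low-level policies are learned with Q-learning), and the controller assumes the sub-goals are pursued in a fixed order such as the one produced by the trajectory alignment step.

```python
import random
from typing import Dict, Hashable, Iterable, Optional


class LowLevelAgent:
    """Hypothetical placeholder for a learned low-level policy (random actions)."""

    def __init__(self, n_actions: int, seed: int = 0) -> None:
        self.n_actions = n_actions
        self.rng = random.Random(seed)

    def act(self, observation, subgoal: Hashable) -> int:
        # A shared agent would condition on `subgoal`; the placeholder ignores it.
        return self.rng.randrange(self.n_actions)


class HierarchicalController:
    """Routes each step to the low-level agent responsible for the current sub-goal."""

    def __init__(self, subgoals: Iterable[Hashable], n_actions: int, shared: bool = False) -> None:
        self.subgoals = list(subgoals)
        if shared:
            # Single-agent variant: one low-level agent for all sub-goals.
            agent = LowLevelAgent(n_actions)
            agents = {g: agent for g in self.subgoals}
        else:
            # Full variant: a separate low-level agent per sub-goal.
            agents = {g: LowLevelAgent(n_actions, seed=i) for i, g in enumerate(self.subgoals)}
        self.agents: Dict[Hashable, LowLevelAgent] = agents
        self.index = 0

    @property
    def current_subgoal(self) -> Optional[Hashable]:
        return self.subgoals[self.index] if self.index < len(self.subgoals) else None

    def act(self, observation) -> int:
        goal = self.current_subgoal
        if goal is None:
            raise RuntimeError("all sub-goals have been completed")
        return self.agents[goal].act(observation, goal)

    def on_subgoal_reached(self) -> None:
        # Advance to the next sub-goal in the fixed order.
        self.index += 1
```

With `HierarchicalController(range(12), n_actions=18)` each of the 12 sub-goals gets its own agent, while `shared=True` maps all of them to a single agent. Separate agents avoid interference between sub-goals at the cost of more parameters and less experience per agent.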

## Extension to Venture and Hero

Under construction.

## Citation

Please consider citing these papers if you use Int-HRL or parts of this repository in your research:

```bibtex
@article{penzkofer24_ncaa,
  author = {Penzkofer, Anna and Schaefer, Simon and Strohm, Florian and Bâce, Mihai and Leutenegger, Stefan and Bulling, Andreas},
  title = {Int-HRL: Towards Intention-based Hierarchical Reinforcement Learning},
  journal = {Neural Computing and Applications (NCAA)},
  year = {2024},
  pages = {1--7},
  doi = {10.1007/s00521-024-10596-2},
  volume = {36}
}

@inproceedings{penzkofer23_ala,
  author = {Penzkofer, Anna and Schaefer, Simon and Strohm, Florian and Bâce, Mihai and Leutenegger, Stefan and Bulling, Andreas},
  title = {Int-HRL: Towards Intention-based Hierarchical Reinforcement Learning},
  booktitle = {Proc. Adaptive and Learning Agents Workshop (ALA)},
  year = {2023},
  doi = {10.48550/arXiv.2306.11483},
  pages = {1--7}
}
```