Official code for "VSA4VQA: Scaling a Vector Symbolic Architecture to Visual Question Answering on Natural Images" published at CogSci'24


VSA4VQA

Installation

```bash
# create environment
conda create -n ssp_env python=3.9 pip
conda activate ssp_env
conda install pytorch torchvision pytorch-cuda=11.8 -c pytorch -c nvidia -y
sudo apt install libmysqlclient-dev

# install requirements
pip install -r requirements.txt

# install CLIP
pip install git+https://github.com/openai/CLIP.git

# set up Jupyter notebook kernel
python -m ipykernel install --user --name=ssp_env
```
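After installation, you can quickly confirm that the main packages are visible from the `ssp_env` environment. The sketch below only checks whether each module can be found, without importing it. The module list is an assumption based on the commands above (the full contents of `requirements.txt` are not listed here), and `check_modules` is a hypothetical helper, not part of the repository.

```python
import importlib.util

# Assumed module names for the steps above: torch/torchvision (conda),
# clip (OpenAI CLIP from GitHub), ipykernel (used for the kernel registration).
REQUIRED_MODULES = ["torch", "torchvision", "clip", "ipykernel"]


def check_modules(names=REQUIRED_MODULES):
    """Return {module_name: True/False} depending on whether it can be found."""
    # find_spec returns None for a missing top-level module instead of raising.
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in check_modules().items():
        print(f"{name:12s} {'ok' if ok else 'MISSING'}")
```

Run it with `python` from inside the activated environment. A `MISSING` entry points to the installation step that needs repeating.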

Get GQA Programs

The programs are generated with the code from https://github.com/wenhuchen/Meta-Module-Network (MMN):

- Download the MMN GitHub repository.
- Add a `gqa-questions` folder containing the GQA JSON files.
- Run the preprocessing: `python preprocess.py create_balanced_programs`
- Save the generated programs to the data folder:
  - `testdev_balanced_inputs.json`
  - `trainval_balanced_inputs.json`
  - `testdev_balanced_programs.json`
  - `trainval_balanced_programs.json`
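Checking that the four program files are in place before running the pipeline can save a failed run later. The following is a minimal sketch. It assumes the data folder is a directory named `data` relative to where you run it (an assumption, so adjust `data_dir` if needed). `missing_program_files` and `load_json` are hypothetical helpers, and the sketch makes no assumptions about the JSON layout inside the files.

```python
import json
from pathlib import Path

# The four files produced by the MMN preprocessing step listed above.
PROGRAM_FILES = [
    "testdev_balanced_inputs.json",
    "trainval_balanced_inputs.json",
    "testdev_balanced_programs.json",
    "trainval_balanced_programs.json",
]


def missing_program_files(data_dir="data"):
    """Return the expected program files that are not present in data_dir."""
    data_dir = Path(data_dir)
    return [name for name in PROGRAM_FILES if not (data_dir / name).is_file()]


def load_json(path):
    """Load one JSON file as-is; its internal structure is not assumed here."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    missing = missing_program_files("data")
    print("Missing:", ", ".join(missing) if missing else "none")
```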

GQA dictionaries:

`gqa_all_attributes.json` and `gqa_all_vocab_classes.json` are also adapted from https://github.com/wenhuchen/Meta-Module-Network.

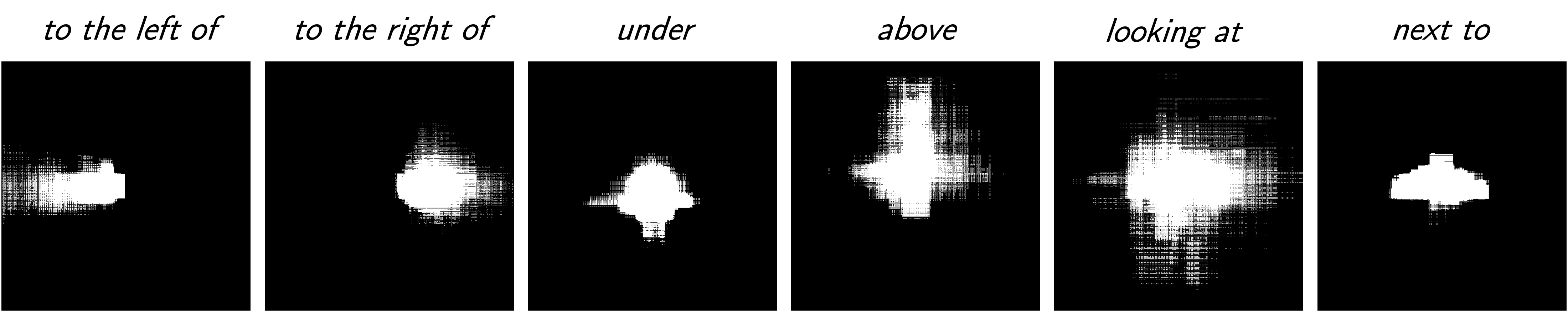

Generate Query Masks

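All 37 generated query masks are available as NumPy (`.npy`) files in `relations.zip`. Extract the archive so that the files sit in a `relations/` folder, as the loading code expects: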

```python
# loading a query mask with numpy
import numpy as np

rel = 'to_the_right_of'        # relation name = file name without .npy
path = f'relations/{rel}.npy'
mask = np.load(path)
mask = mask > 0.05             # threshold into a binary (boolean) mask
```
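To load all masks at once without extracting the archive, you could read them directly with Python's standard `zipfile` module. This minimal sketch assumes each mask is stored as `<relation>.npy` somewhere inside `relations.zip` (the internal folder layout is not documented here). It reuses the 0.05 threshold from the example above. `load_query_masks` is a hypothetical helper, not part of the repository.

```python
import io
import zipfile
from pathlib import PurePosixPath

import numpy as np


def load_query_masks(zip_source="relations.zip", threshold=0.05):
    """Read every .npy query mask straight from the archive and binarize it.

    zip_source may be a path or a file-like object. Returns a dict mapping the
    relation name (file name without .npy, e.g. 'to_the_right_of') to a
    boolean mask (values > threshold).
    """
    masks = {}
    with zipfile.ZipFile(zip_source) as zf:
        for member in zf.namelist():
            # Skip non-mask entries and macOS resource-fork copies.
            if not member.endswith(".npy") or member.startswith("__MACOSX/"):
                continue
            # np.load needs a seekable file object, so buffer the member in memory.
            soft_mask = np.load(io.BytesIO(zf.read(member)))
            masks[PurePosixPath(member).stem] = soft_mask > threshold
    return masks
```

If the archive stores the file as `to_the_right_of.npy`, then `load_query_masks()["to_the_right_of"]` should match the per-file example above.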

If you want to run the generation process yourself, run:

```bash
python generate_query_masks.py
```

- Generates `full_relations_df.pkl` if it is not already present.

- Generates query masks for all relations with more than 1000 samples.
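The sample-count filter in the second step can be sketched with `collections.Counter`. This is only an illustration, not the code in `generate_query_masks.py`. It assumes you already have one relation label per sample, for instance from a column of `full_relations_df.pkl`, whose column names are not documented here. `frequent_relations` is a hypothetical helper.

```python
from collections import Counter

MIN_SAMPLES = 1000  # "more than 1000 samples", i.e. strictly greater


def frequent_relations(relation_names, min_samples=MIN_SAMPLES):
    """Return relations occurring more than min_samples times, most frequent first."""
    counts = Counter(relation_names)
    return [rel for rel, n in counts.most_common() if n > min_samples]
```

A relation with exactly 1000 samples is excluded, which matches the strict "more than" in the description.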

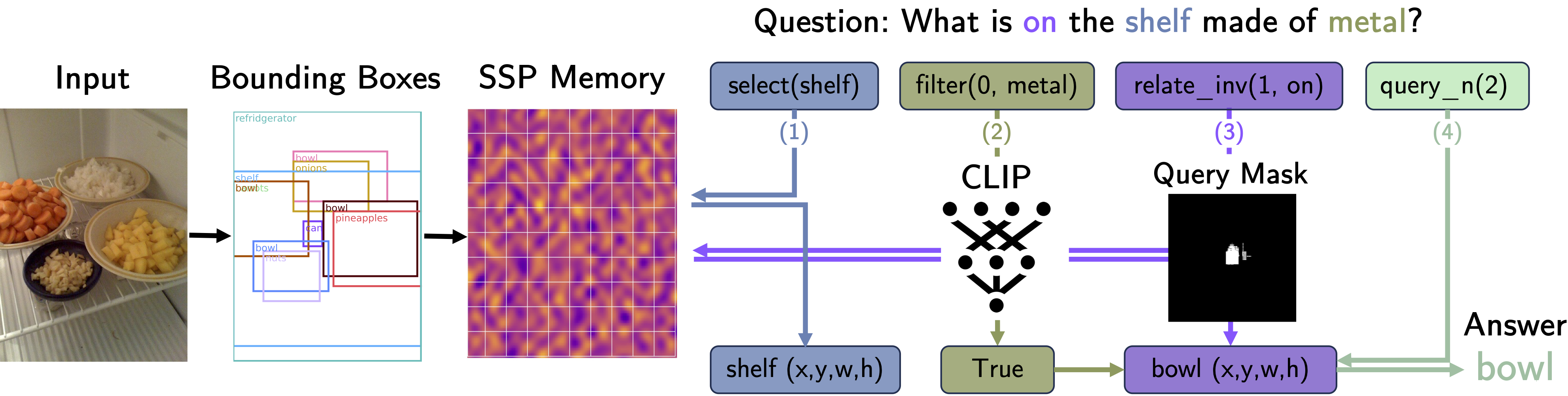

Pipeline

Execute the pipeline for all samples in GQA `train_balanced` (with `TEST=False`) or `validation_balanced` (with `TEST=True`):

```bash
python run_programs.py
```

To visualize samples with all pipeline steps, see `VSA4VQA_examples.ipynb`.

Citation

Please consider citing this paper if you use VSA4VQA or parts of this publication in your research:

```bibtex
@inproceedings{penzkofer24_cogsci,
  author = {Penzkofer, Anna and Shi, Lei and Bulling, Andreas},
  title = {VSA4VQA: Scaling A Vector Symbolic Architecture To Visual Question Answering on Natural Images},
  booktitle = {Proc. 46th Annual Meeting of the Cognitive Science Society (CogSci)},
  year = {2024},
  pages = {}
}
```