5.6 KiB

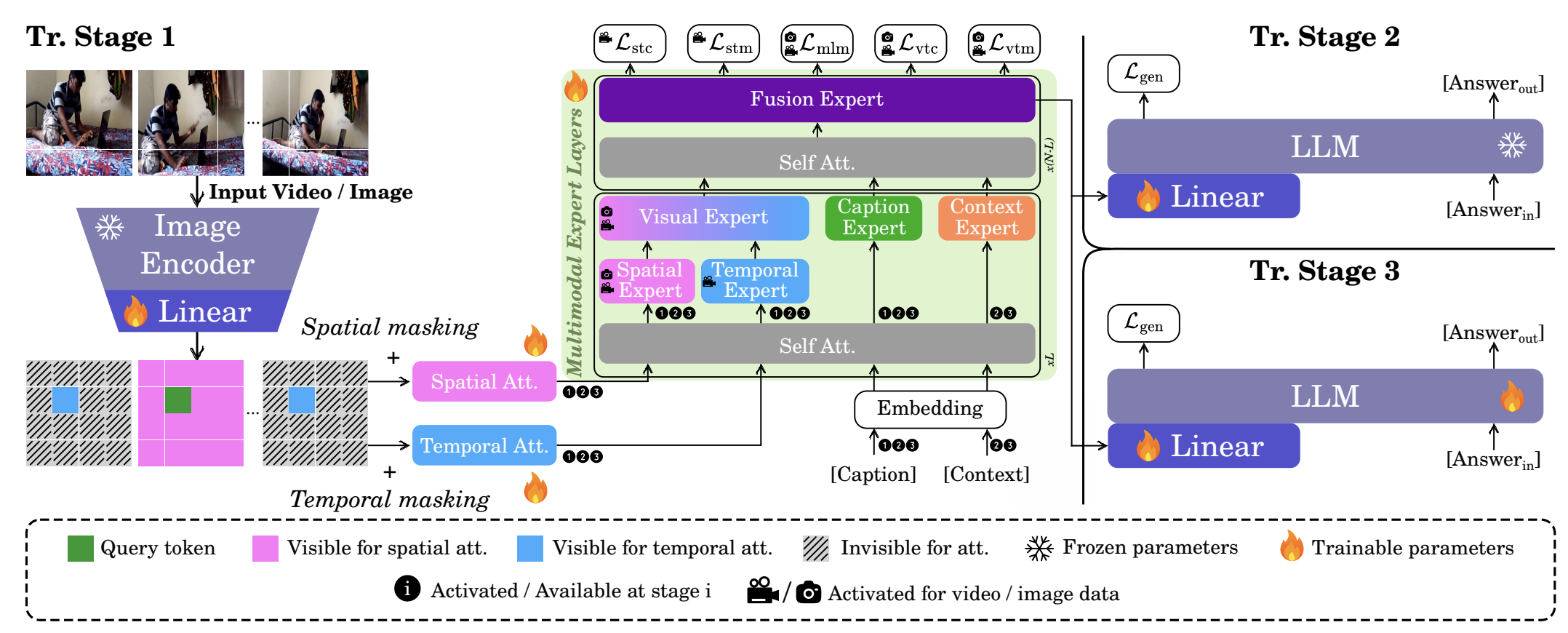

V2Dial  : Unification of Video and Visual Dialog via Multimodal Experts

: Unification of Video and Visual Dialog via Multimodal Experts

Adnen Abdessaied, Anna Rohrbach, Marcus Rohrbach, Andreas Bulling

CVPR 2025, Nashville, TN, USA

[Paper]

Citation

If you find our code useful or use it in your own projects, please cite our paper:

@InProceedings{v2dial_abdessaied,

author = {Abdessaied, Adnen and Rohrbach, Anna and Rohrbach, Marcus and Bulling, Andreas},

title = {{V2Dial: Unification of Video and Visual Dialog via Multimodal Experts}},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}

Table of Contents

Setup and Dependencies

Create a conda environment and install dependencies

conda create -n v2dial python=3.9

conda activate v2dial

conda install pytorch==2.2.0 torchvision==0.17.0 torchaudio==2.2.0 pytorch-cuda=11.8 -c pytorch -c nvidia

conda install -c huggingface transformers

pip install evaluate wandb glog pyhocon

Download Data

❗ We do NOT own any of the data used in this projects. For legal reasons, we only provide links to where they could be downloaded.

Champagne

- Textual data can be accessed here

- The video url can be used to download the raw videos if needed. This can done using the folowing code

WebVid-2M

- Please follow the instructions/hints from this repo the download the dataset

CC3M

- Please follow these instructions to download the dataset

AVSD

- The textual data of the three versions can be downloaded from AVSD-DSTC7, AVSD-DSTC8 and AVSD-DSTC10, respectively

- The videos can be obtained from here

VisDial v1.0

- Both textual and image data can be obtained from here

After the data is downloaded, you need to set up their paths correctly in the config-files in \config

Training

We trained our model on 8 Nvidia A100 GPUs on all different stages.

Stage 1

Run

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python main_stage_1.py \

--mode train \

--tag stage_1 \

Stage 2

Run

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python main_stage_2.py \

--mode train \

--tag stage_2 \

Stage 3

Run

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python main_stage_3.py \

--mode train \

--tag stage_3 \

Response Generation

AVSD-DSTC7

- Set

dstc=7in the.conffile of your trained networks. in The default setting, can find this underlogs/exeriment_tag/code/config/v2_dial_stage_x.conf - Generate the responses

./generate_parallel_avsd.sh v2dial/stage_x results_avsd_dstc7_v2dial_stage_x generate logs/stage_x/tag_to_be_used 7

- All responses will be saved in

output/dstc7/

AVSD-DSTC8

- Set

dstc=8in the.conffile of your trained networks. in The default setting, can find this underlogs/exeriment_tag/code/config/v2_dial_stage_x.conf - Generate the responses

./generate_parallel_avsd.sh v2dial/stage_x results_avsd_dstc8_v2dial_stage_x generate logs/stage_x/tag_to_be_used 8

- All responses will be saved in

output/dstc8/

AVSD-DSTC10

- Set

dstc=10in the.conffile of your trained networks. in The default setting, can find this underlogs/exeriment_tag/code/config/v2_dial_stage_x.conf - Generate the responses

./generate_parallel_avsd.sh v2dial/stage_x results_avsd_dstc10_v2dial_stage_x generate logs/stage_x/tag_to_be_used 10

- All responses will be saved in

output/dstc10/

VisDial

- Generate the responses

./generate_parallel_visdial.sh v2dial/stage_x results_visdial_v2dial_stage_x generate logs/stage_x/tag_to_be_used

- All responses will be saved in

output/visdial/

Results

AVSD

To evaluate the results of AVSD, please use the tool Executing the eval_tool_dstc7 of AVSD-DSTC7 eval_tool_dstc and eval_tool_dstc10 on the generated reponses from the previous stage.

VisDial

Use the script eval_visdial.py for evaluation.

Acknowledgements

We thank the authors of miniGPT4-Video, VindLU, BLIP-2 for providing their codebases that greatly influenced this work.